To migrate the database files from file-system storage to ASM disk groups, the steps are as follows:

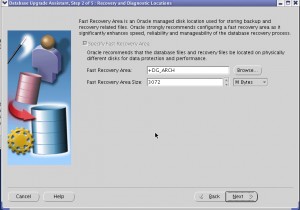

1. Configure the flash recovery area.

2. Migrate the data files to ASM.

3. Migrate the control file to ASM.

4. Create a temporary tablespace.

5. Migrate the redo log files.

6. Migrate the spfile to ASM.
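Before starting, it helps to confirm that the target disk groups are mounted and have room for the database. The following is a minimal sketch, run as SYSDBA in the database instance; it assumes the disk group names DATA and RECOVERYDEST that are used later in this walkthrough.

```sql
-- Disk groups visible to this database instance, with capacity in MB.
-- DATA and RECOVERYDEST are the names used in this walkthrough.
SELECT name, state, type, total_mb, free_mb, usable_file_mb
FROM   v$asm_diskgroup
WHERE  name IN ('DATA', 'RECOVERYDEST');

-- Total size of the data files that will be copied into +DATA.
SELECT ROUND(SUM(bytes) / 1024 / 1024) AS datafile_mb
FROM   dba_data_files;
```

Compare DATAFILE_MB with USABLE_FILE_MB for DATA: FREE_MB is raw space and does not account for mirroring in NORMAL or HIGH redundancy disk groups.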

Step 1: Configure the flash recovery area.


SQL> connect sys/sys@prod1 as sysdba

Connected.

SQL> alter database disable block change tracking;

Database altered.

SQL> alter system set db_recovery_file_dest_size=500m;

System altered.

SQL> alter system set db_recovery_file_dest='+RECOVERYDEST';

System altered.
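A quick check that the recovery area settings above took effect; a minimal sketch using the SQL*Plus SHOW PARAMETER command and the V$RECOVERY_FILE_DEST view:

```sql
-- SHOW PARAMETER lists every parameter whose name contains the string,
-- so this shows both db_recovery_file_dest and db_recovery_file_dest_size.
SHOW PARAMETER db_recovery_file_dest

-- Location, quota and current usage of the recovery area (bytes).
SELECT name, space_limit, space_used, number_of_files
FROM   v$recovery_file_dest;
```

With the values used above, NAME should be +RECOVERYDEST and SPACE_LIMIT should be 524288000 (500 MB).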

Steps 2 and 3: Migrate the data files and control file to ASM.

Use RMAN to migrate the data files to ASM. All data files will be migrated to the newly created disk group, DATA. First make +DATA the default location for new files, point CONTROL_FILES at ASM and shut down:

SQL> alter system set db_create_file_dest='+DATA';

System altered.

SQL> alter system set control_files='+DATA/ctf1.dbf' scope=spfile;

System altered.

SQL> shu immediate

Then, from RMAN, restore the control file into ASM, copy the database into +DATA and switch to the copies:

[oracle@rac1 bin]$ ./rman target /

RMAN> startup nomount

Oracle instance started

RMAN> restore controlfile from '/u01/new/oracle/oradata/mydb/control01.ctl';

Starting restore at 08-DEC-09

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=146 device type=DISK

channel ORA_DISK_1: copied control file copy

output file name=+DATA/ctf1.dbf

Finished restore at 08-DEC-09

RMAN> alter database mount;

database mounted

released channel: ORA_DISK_1

RMAN> backup as copy database format '+DATA';

Starting backup at 08-DEC-09

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=146 device type=DISK

channel ORA_DISK_1: starting datafile copy

input datafile file number=00001 name=/u01/new/oracle/oradata/mydb/system01.dbf

output file name=+DATA/mydb/datafile/system.257.705063763 tag=TAG20091208T110241 RECID=1 STAMP=705064274

channel ORA_DISK_1: datafile copy complete, elapsed time: 00:08:39

channel ORA_DISK_1: starting datafile copy

input datafile file number=00002 name=/u01/new/oracle/oradata/mydb/sysaux01.dbf

output file name=+DATA/mydb/datafile/sysaux.258.705064283 tag=TAG20091208T110241 RECID=2 STAMP=705064812

channel ORA_DISK_1: datafile copy complete, elapsed time: 00:08:56

channel ORA_DISK_1: starting datafile copy

input datafile file number=00003 name=/u01/new/oracle/oradata/mydb/undotbs01.dbf

output file name=+DATA/mydb/datafile/undotbs1.259.705064821 tag=TAG20091208T110241 RECID=3 STAMP=705064897

channel ORA_DISK_1: datafile copy complete, elapsed time: 00:01:25

channel ORA_DISK_1: starting datafile copy

copying current control file

output file name=+DATA/mydb/controlfile/backup.260.705064907 tag=TAG20091208T110241 RECID=4 STAMP=705064912

channel ORA_DISK_1: datafile copy complete, elapsed time: 00:00:07

channel ORA_DISK_1: starting datafile copy

input datafile file number=00004 name=/u01/new/oracle/oradata/mydb/users01.dbf

output file name=+DATA/mydb/datafile/users.261.705064915 tag=TAG20091208T110241 RECID=5 STAMP=705064915

channel ORA_DISK_1: datafile copy complete, elapsed time: 00:00:03

channel ORA_DISK_1: starting full datafile backup set

channel ORA_DISK_1: specifying datafile(s) in backup set

including current SPFILE in backup set

channel ORA_DISK_1: starting piece 1 at 08-DEC-09

channel ORA_DISK_1: finished piece 1 at 08-DEC-09

piece handle=+DATA/mydb/backupset/2009_12_08/nnsnf0_tag20091208t110241_0.262.705064919 tag=TAG20091208T110241 comment=NONE

channel ORA_DISK_1: backup set complete, elapsed time: 00:00:01

Finished backup at 08-DEC-09

RMAN> switch database to copy;

datafile 1 switched to datafile copy "+DATA/mydb/datafile/system.257.705063763"

datafile 2 switched to datafile copy "+DATA/mydb/datafile/sysaux.258.705064283"

datafile 3 switched to datafile copy "+DATA/mydb/datafile/undotbs1.259.705064821"

datafile 4 switched to datafile copy "+DATA/mydb/datafile/users.261.705064915"

RMAN> alter database open;

database opened

RMAN> exit

Recovery Manager complete.

SQL> conn sys/oracle as sysdba

Connected.

SQL> select tablespace_name,file_name from dba_data_files;

TABLESPACE_NAME                FILE_NAME

------------------------------ ---------------------------------------------

USERS                          +DATA/mydb/datafile/users.261.705064915

UNDOTBS1                       +DATA/mydb/datafile/undotbs1.259.705064821

SYSAUX                         +DATA/mydb/datafile/sysaux.258.705064283

SYSTEM                         +DATA/mydb/datafile/system.257.705063763

SQL> select name from v$controlfile;

NAME

----

+DATA/ctf1.dbf

Step 4: Migrate the temporary tablespace to ASM.

Add a tempfile to the existing TEMP tablespace. Because DB_CREATE_FILE_DEST is set to +DATA, the new file is created in that disk group:

SQL> alter tablespace temp add tempfile size 100m;

Tablespace altered.

SQL> select file_name from dba_temp_files;

FILE_NAME

---------------------------------------------

+DATA/mydb/tempfile/temp.263.705065455

Otherwise, create a new temporary tablespace in the ASM disk group and make it the database default:

SQL> CREATE TEMPORARY TABLESPACE temp1 TEMPFILE '+DATA' SIZE 100M;

SQL> alter database default temporary tablespace temp1;

Database altered.

Step 5: Migrate the redo logs to ASM.

Add new log groups (they are created in +DATA because DB_CREATE_FILE_DEST is set), switch logs, and then drop the old file-system groups 1, 2 and 3. A group can only be dropped when it is neither CURRENT nor ACTIVE; if a drop fails, switch logs or run ALTER SYSTEM CHECKPOINT and retry.

SQL> select member,group# from v$logfile;

MEMBER                                             GROUP#

-------------------------------------------------- ----------

/u01/new/oracle/oradata/mydb/redo03.log            3

/u01/new/oracle/oradata/mydb/redo02.log            2

/u01/new/oracle/oradata/mydb/redo01.log            1

SQL> alter database add logfile group 4 size 5m;

Database altered.

SQL> alter database add logfile group 5 size 5m;

Database altered.

SQL> alter database add logfile group 6 size 5m;

Database altered.

SQL> select member,group# from v$logfile;

MEMBER                                             GROUP#

-------------------------------------------------- ----------

/u01/new/oracle/oradata/mydb/redo03.log            3

/u01/new/oracle/oradata/mydb/redo02.log            2

/u01/new/oracle/oradata/mydb/redo01.log            1

+DATA/mydb/onlinelog/group_4.264.705065691         4

+DATA/mydb/onlinelog/group_5.265.705065703         5

+DATA/mydb/onlinelog/group_6.266.705065719         6

SQL> alter system switch logfile;

System altered.

SQL> alter database drop logfile group 2;

Database altered.

SQL> alter database drop logfile group 3;

Database altered.

SQL> alter database drop logfile group 1;

Database altered.

Add an additional control file.

If an additional control file is required for redundancy, you can create it in ASM as you would on any other file system.

SQL> connect sys/sys@prod1 as sysdba

Connected to an idle instance.

SQL> startup mount

ORACLE instance started.

SQL> alter database backup controlfile to '+DATA/cf2.dbf';

Database altered.

SQL> alter system set control_files='+DATA/ctf1.dbf','+DATA/cf2.dbf' scope=spfile;

System altered.

SQL> shutdown immediate;

ORA-01109: database not open

Database dismounted.

ORACLE instance shut down.

SQL> startup

ORACLE instance started.

SQL> select name from v$controlfile;

NAME

---------------------------------------

+DATA/ctf1.dbf

+DATA/cf2.dbf

Step 6: Migrate the spfile to ASM.

Create a copy of the SPFILE in the ASM disk group. In this example, the SPFILE for the migrated database will be stored as +DISK/spfile.

If the database is already using an SPFILE, run these commands in RMAN:

run {

BACKUP AS BACKUPSET SPFILE;

RESTORE SPFILE TO "+DISK/spfile";

}

If you are not using an SPFILE, use CREATE SPFILE from SQL*Plus to create the new SPFILE in ASM. For example, if your parameter file is called /private/init.ora, use the following command:

SQL> create spfile='+DISK/spfile' from pfile='/private/init.ora';
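Creating the SPFILE in ASM does not by itself make the instance read it at the next startup. One common approach, sketched here under the assumption that the instance SID is mydb (taken from the file paths above; substitute your own), is to replace $ORACLE_HOME/dbs/initmydb.ora with the single line SPFILE='+DISK/spfile' and move any spfilemydb.ora out of $ORACLE_HOME/dbs, because the instance looks for spfile<SID>.ora before init<SID>.ora. After that, restart and confirm which SPFILE is in use:

```sql
-- Restart so the instance rereads its parameter files.
SHUTDOWN IMMEDIATE
STARTUP

-- VALUE should now show the ASM copy (+DISK/spfile in this example).
SHOW PARAMETER spfile
```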

After all the data files have been migrated to ASM, the old data files are no longer needed and can be removed. Your single-instance database is now running on ASM!
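Before deleting anything, it is worth confirming that no database file is still referenced outside ASM. ASM file names start with '+', so a minimal check, sketched below, lists any file that does not; an empty result means the data files, tempfiles, redo logs and control files are all in ASM. Switching the data files and dropping the old redo log groups does not remove the original files from the file system (here, the files under /u01/new/oracle/oradata/mydb/), so those have to be deleted separately. Because block change tracking was disabled in step 1, the sketch also shows re-enabling it, assuming you want it back; with DB_CREATE_FILE_DEST set to '+DATA', the tracking file is created in that disk group as an Oracle-managed file.

```sql
-- Any row returned is a file the database still uses outside ASM.
SELECT 'DATAFILE' AS file_type, name FROM v$datafile WHERE name NOT LIKE '+%'
UNION ALL
SELECT 'TEMPFILE', name FROM v$tempfile WHERE name NOT LIKE '+%'
UNION ALL
SELECT 'REDO LOG', member FROM v$logfile WHERE member NOT LIKE '+%'
UNION ALL
SELECT 'CONTROL FILE', name FROM v$controlfile WHERE name NOT LIKE '+%';

-- Optional: re-enable block change tracking (disabled in step 1).
-- With no USING FILE clause, the file goes to DB_CREATE_FILE_DEST (+DATA).
ALTER DATABASE ENABLE BLOCK CHANGE TRACKING;

-- Confirm the tracking file location.
SELECT status, filename FROM v$block_change_tracking;
```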